Load data#

DNIKit pipelines and algorithms run using

lazy evaluation, consuming data in

Batches so as to scale computation for large dataset sizes.

The entity responsible for producing Batches of data is called a
Producer. From there, the data can be transformed by the stages of
a DNIKit pipeline, such as pre- or post-processors, model
inference, and more.

DNIKit uses Producers to connect datasets with the rest of DNIKit

in Batches.
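The lazy, batched evaluation described above can be illustrated without DNIKit at all. The following is a minimal sketch in plain Python, assuming a hypothetical `toy_producer` that yields plain dictionaries as stand-ins for Batch objects. It only shows that a generator does no work until something iterates over it; it is not how DNIKit itself is implemented.

```python
from typing import Dict, Iterator, List

# Records every batch the generator actually builds, to make laziness visible.
produced_log: List[int] = []


def toy_producer(batch_size: int) -> Iterator[Dict[str, List[int]]]:
    """Hypothetical stand-in for a Producer: yields dicts, not DNIKit Batches."""
    dataset = list(range(10))  # pretend dataset of 10 samples
    for start in range(0, len(dataset), batch_size):
        chunk = dataset[start:start + batch_size]
        produced_log.append(len(chunk))
        yield {"samples": chunk}


# Calling the generator function builds nothing yet.
batches = toy_producer(batch_size=4)
log_before_iteration = list(produced_log)  # still empty

# Work happens one batch at a time, only when a consumer asks for it.
first_batch = next(batches)
log_after_first = list(produced_log)  # exactly one batch built so far

remaining = list(batches)  # drains the rest of the dataset
```

Because only one batch is held at a time, memory use depends on batch_size rather than on the size of the dataset.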

To run inference with a model outside of DNIKit, define a
Producer that produces Batches
of model responses instead of data samples.

Set up a Producer of Batches#

Producers can simply be generator functions
that take a batch_size parameter and yield Batch objects;
they may also be callable classes (classes with a __call__ method).
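As a rough illustration of the callable-class form, here is a minimal sketch in plain Python. `ListProducer` is a hypothetical name, and it yields plain dictionaries as stand-ins; a real DNIKit producer would yield Batch objects instead.

```python
from typing import Dict, Iterator, List, Sequence


class ListProducer:
    """Hypothetical callable-class producer over an in-memory sequence.

    Yields plain dicts as stand-ins; a real DNIKit producer would yield
    Batch objects instead.
    """

    def __init__(self, samples: Sequence[float], field_name: str = "samples") -> None:
        self._samples = list(samples)
        self._field_name = field_name

    def __call__(self, batch_size: int) -> Iterator[Dict[str, List[float]]]:
        # Each call starts a fresh pass over the stored samples.
        for start in range(0, len(self._samples), batch_size):
            yield {self._field_name: self._samples[start:start + batch_size]}


producer = ListProducer([0.1, 0.2, 0.3, 0.4, 0.5])
batches = list(producer(batch_size=2))
```

A class is convenient when the producer needs configuration (paths, field names, limits) that should be set once in the constructor rather than passed on every call.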

Here’s an example generator function that loads the

CIFAR10 dataset using Keras:

from keras.datasets import cifar10
from dnikit.base import Batch, Producer

def cifar10_dataset(batch_size: int):  # Producer
    # Download CIFAR-10
    (x_train, y_train), (x_test, y_test) = cifar10.load_data()
    dataset_size = len(x_train)

    # Yield batches with an "images" feature,
    # of length batch_size or lower
    for i in range(0, dataset_size, batch_size):
        j = min(dataset_size, i + batch_size)
        batch_data = {
            "images": x_train[i:j, ...]
        }
        yield Batch(batch_data)

This batch generator can be used in a pipeline

by passing it as the first argument:

from dnikit.base import pipeline

my_processing_pipeline = pipeline(
    cifar10_dataset,
    ...  # later steps here
)

DNIKit provides a built-in Producer, ImageProducer,
to load all images from a local directory. By default, it searches
recursively through all subdirectories. For instance, if the MNIST dataset
is stored locally, ImageProducer can load it as follows:

from dnikit.base import ImageProducer

mnist_dataset = ImageProducer('path/to/mnist/directory') # Producer

For an example of creating a custom Producer that attaches

metadata (such as labels) to batches, see

Creating a Custom Producer.

DNIKit also provides mechanisms for transforming between a PyTorch dataset and a Producer:

ProducerTorchDataset and

TorchProducer.

Format of Batch objects#

Batches hold samples of data, whether audio, images, text,
embeddings, responses, labels, etc. At their most basic, Batches
wrap dictionaries that map from str keys to numpy arrays.

For instance, a Batch can be created

by passing a dictionary of the feature fields:

import numpy as np

from dnikit.base import Batch

# Create a batch of words with 3 samples

words = np.array(["cat", "dog", "elephant"])

data = {"words": words}

batch = Batch(data)

In practice, however, it’s typical to yield batches
inside a generator function. For instance, here’s a random number generator
that produces 4096 samples of random floats between 0 and 1.0:

import numpy as np
from dnikit.base import Batch

def rnd_num_batch_generator(batch_size: int):
    max_samples = 4096
    for ii in range(0, max_samples, batch_size):
        # The final batch may be shorter than batch_size
        local_batch_size = min(batch_size, max_samples - ii)
        random_floats = np.random.rand(local_batch_size)
        batch_data = {
            "samples": random_floats  # of shape (local_batch_size,)
        }
        yield Batch(batch_data)
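When the number of samples is not a multiple of batch_size, the final batch is shorter than the rest. Here is a minimal sketch of that arithmetic in plain Python, using a hypothetical helper `batch_sizes` that is not part of DNIKit:

```python
from typing import List


def batch_sizes(num_samples: int, batch_size: int) -> List[int]:
    """Return the size of each batch when splitting num_samples into batches."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return [
        min(batch_size, num_samples - start)
        for start in range(0, num_samples, batch_size)
    ]


# 4096 samples in batches of 1000: four full batches and one of 96.
sizes = batch_sizes(num_samples=4096, batch_size=1000)
```

The sizes always sum to the number of samples, which is a quick sanity check for any hand-written batch generator.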

As its name indicates, a Batch contains several data elements. Following
deep learning terminology, the number of elements in a batch is called
batch_size in DNIKit.
Batch expects
the 0th dimension of every value (a numpy array) in every field to denote
the batch_size
(as in PyTorch, TensorFlow, and other ML frameworks).
Further, the batch_size of all fields in a
Batch must be the same, or DNIKit will raise an error.
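The consistency rule can be checked by hand before building a batch. The sketch below is a hypothetical helper in plain Python, not DNIKit's own validation; it relies on the fact that len() returns the size of the 0th dimension for numpy arrays and for plain sequences alike.

```python
from typing import Mapping, Sequence


def common_batch_size(fields: Mapping[str, Sequence]) -> int:
    """Return the shared leading length of all fields, or raise ValueError."""
    sizes = {name: len(values) for name, values in fields.items()}
    if not sizes:
        raise ValueError("no fields given")
    if len(set(sizes.values())) != 1:
        raise ValueError(f"mismatched batch sizes: {sizes}")
    return next(iter(sizes.values()))


# Both fields describe 3 samples, so the batch size is 3.
ok_size = common_batch_size(
    {"images": [[0, 0], [1, 1], [2, 2]], "scores": [0.1, 0.5, 0.9]}
)

# Here "images" has 2 samples but "scores" has 3, which is rejected.
try:
    common_batch_size({"images": [[0, 0], [1, 1]], "scores": [0.1, 0.5, 0.9]})
    mismatch_detected = False
except ValueError:
    mismatch_detected = True
```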

Besides these regular fields, Batches
can also contain snapshots and metadata.

Batch snapshots capture a specific state of a Batch as

it’s going through the DNIKit pipeline

(see below). For instance, model output can be captured in a

snapshot before sending data into postprocessing.

Batch metadata holds metadata about a data sample. For example,

label metadata about a data sample can be added as

Batch.metadata. To attach metadata to batches,

use a Batch.Builder to create the batch:

import numpy as np
from dnikit.base import Batch

# Load data and labels
images = np.zeros((3, 64, 64, 3))
fine_class = np.array(["hawk", "ferret", "rattlesnake"])
coarse_class = np.array(["bird", "mammal", "snake"])

# Build a batch of 3 images, attaching labels as metadata:
builder = Batch.Builder()

# Add a field (feature)
builder.fields["images"] = images

# Attach labels
builder.metadata[Batch.StdKeys.LABELS] = {
    "fine": fine_class,
    "coarse": coarse_class
}

# Create batch
batch = builder.make_batch()

Here is a visualization of a sample Batch with a batch size of 32,
two fields, metadata, and a snapshot.

[Figure: "Batch Sample with 32 elements", shown as three Key/Value tables. Most cell contents did not survive. The recoverable metadata descriptions are: a sequence of Hashable unique identifiers for each data sample; a mapping of label dimensions to labels for each data sample in the batch; and a third entry that is truncated after "A mapping of".]

Set up a pipeline#

After a data Producer has been set up,

the producer can feed batches into a DNIKit-loaded

model (and through any preprocessing steps)

by setting up a pipeline, e.g.:

from dnikit.base import pipeline
from dnikit_tensorflow import load_tf_model_from_path

producer = ...
preprocessing = ...  # a dnikit.processors.Processor
model = load_tf_model_from_path(...)
response_name = ...  # name of the model response to request

my_pipeline = pipeline(
    producer,
    preprocessing,
    model(response_name)
)

The pipeline my_pipeline will only begin to pull

batches and perform inference when passed to an Introspector.

In the preceding example, preprocessing is a batch
Processor that transforms the data
in the batches. DNIKit ships with many Processors
to apply common data pre-processing and
post-processing techniques. These include resizing,
concatenation, pooling, normalization, and caching,
among others. To write a custom Processor,
see the Batch Processing page.

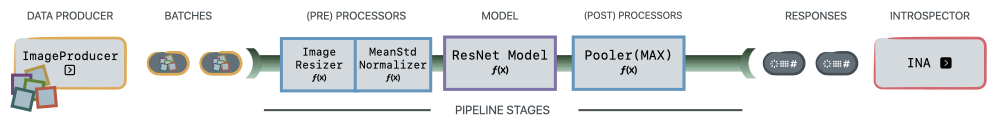

Example pipeline#

The following is a sample instantiation of a pipeline with DNIKit:

This pipeline can be implemented, given some Model "model",

with just a few lines of code:

# ImageFormat is assumed to be exported by dnikit.base alongside ImageProducer
from dnikit.base import ImageFormat, ImageProducer, pipeline
from dnikit.processors import ImageResizer, MeanStdNormalizer, Pooler
from dnikit.introspectors import IUA
from dnikit_tensorflow import load_tf_model_from_path

model = load_tf_model_from_path("/path/to/resnet/model")

response_producer = pipeline(
    ImageProducer("/path/to/dataset/images"),
    ImageResizer(pixel_format=ImageFormat.CHW, size=(32, 32)),
    MeanStdNormalizer(mean=0.5, std=1.0),
    model(),
    Pooler(dim=1, method=Pooler.Method.AVERAGE),
)

# Data is only consumed and processed at this point.
result = IUA.introspect(response_producer)

The pipeline corresponds to the following stages, which are called
PipelineStages:

1. ImageProducer is used to load a directory of images (alternatively, it’s possible to implement a custom Producer!).
2. The batches are pre-processed with an ImageResizer and a MeanStdNormalizer.
3. Inference is run on a TensorFlow ResNet model, which can be loaded with load_tf_model_from_path().
4. Finally, the model results are post-processed by running average pooling across the channel dimension of the model responses with a DNIKit Pooler.
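To make the pre- and post-processing steps concrete, here is a small worked sketch in plain Python. It assumes that mean/std normalization computes (x - mean) / std and that average pooling over a dimension replaces that dimension with its mean. The helper names are hypothetical, and this is not DNIKit's implementation of MeanStdNormalizer or Pooler.

```python
from typing import List


def mean_std_normalize(values: List[float], mean: float, std: float) -> List[float]:
    """Apply (x - mean) / std element-wise."""
    return [(x - mean) / std for x in values]


def average_pool_channels(response: List[List[float]]) -> List[float]:
    """Average each sample's values across its channel dimension.

    `response` has shape (batch_size, channels); the result has shape
    (batch_size,).
    """
    return [sum(channels) / len(channels) for channels in response]


# With mean=0.5 and std=1.0, values in [0, 1] are shifted to [-0.5, 0.5].
normalized = mean_std_normalize([0.0, 0.5, 1.0], mean=0.5, std=1.0)

# Two samples with three channels each are reduced to one value per sample.
pooled = average_pool_channels([[1.0, 2.0, 3.0], [0.0, 0.0, 6.0]])
```

Note that pooling keeps the batch dimension intact, so the output still has one entry per data sample.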

This pipeline is fed into Inactive Unit Analysis (IUA),
an introspector that checks whether there were any inactive neurons in the model. Recall that until
introspect is called, no data is consumed or
processed; pipeline() simply sets up the processing graph that will
be executed by the introspectors.
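The idea of building a processing graph first and running it later can be sketched with ordinary generators. The code below is a toy stand-in written for illustration only: `toy_pipeline` and `toy_introspect` are hypothetical names, plain dicts replace Batch objects, and none of this reflects how DNIKit implements pipeline() or its introspectors.

```python
from typing import Callable, Dict, Iterator, List

ToyBatch = Dict[str, List[float]]  # stand-in for a DNIKit Batch
ToyProducer = Callable[[int], Iterator[ToyBatch]]
ToyStage = Callable[[ToyBatch], ToyBatch]

calls: List[str] = []  # records which steps actually ran


def numbers(batch_size: int) -> Iterator[ToyBatch]:
    """Toy producer over four values."""
    data = [1.0, 2.0, 3.0, 4.0]
    for start in range(0, len(data), batch_size):
        calls.append("produce")
        yield {"x": data[start:start + batch_size]}


def double(batch: ToyBatch) -> ToyBatch:
    """Toy processing stage: multiply every value by two."""
    calls.append("double")
    return {"x": [2 * v for v in batch["x"]]}


def toy_pipeline(producer: ToyProducer, *stages: ToyStage) -> ToyProducer:
    """Chain stages onto a producer; returns a new producer and runs nothing."""
    def chained(batch_size: int) -> Iterator[ToyBatch]:
        for batch in producer(batch_size):
            for stage in stages:
                batch = stage(batch)
            yield batch
    return chained


def toy_introspect(producer: ToyProducer, batch_size: int = 2) -> float:
    """Consume every batch and return the sum of all values."""
    return sum(sum(batch["x"]) for batch in producer(batch_size))


lazy = toy_pipeline(numbers, double)
calls_before = list(calls)    # nothing has run yet
total = toy_introspect(lazy)  # data flows only now, batch by batch
```

The recorded call order alternates between producing and processing, which shows that each batch passes through every stage before the next batch is requested.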

Notice that the example uses four pipeline stages,
but DNIKit can chain together as many or as few stages as needed.

Note

In fact, if a method to generate responses from the model is already set up,
it’s not necessary to use DNIKit’s Model abstraction.
Instead, create a custom
Producer of responses, which may be fed directly to an
Introspector. This can also be a good option
for model formats that DNIKit does not currently support, or for connecting
to a model hosted in the cloud and fetching responses asynchronously.
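A producer of precomputed responses follows the same generator pattern as any other producer. The sketch below is a minimal illustration in plain Python: `fetch_responses` is a hypothetical placeholder for whatever already computes model outputs (a local framework call or a remote endpoint), "logits" is an arbitrary example name, and plain dicts stand in for the Batch objects that a real DNIKit producer would yield.

```python
from typing import Callable, Dict, Iterator, List, Sequence

Responses = List[List[float]]


def fetch_responses(inputs: Sequence[float]) -> Responses:
    """Hypothetical external model: returns a 2-value response per input."""
    return [[x, x * x] for x in inputs]


def make_response_producer(
    inputs: Sequence[float],
    run_model: Callable[[Sequence[float]], Responses],
    response_name: str,
) -> Callable[[int], Iterator[Dict[str, Responses]]]:
    """Build a producer that yields model responses batch by batch."""
    def producer(batch_size: int) -> Iterator[Dict[str, Responses]]:
        for start in range(0, len(inputs), batch_size):
            chunk = inputs[start:start + batch_size]
            # Inference happens outside DNIKit; only the responses are yielded.
            yield {response_name: run_model(chunk)}
    return producer


response_producer = make_response_producer([1.0, 2.0, 3.0], fetch_responses, "logits")
response_batches = list(response_producer(batch_size=2))
```

Passing the model call in as a parameter keeps the producer independent of how the responses are obtained, so the same wrapper can sit in front of a local model or a remote service.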

Next Steps#

After setting up a Producer,

loading a model into DNIKit, and thinking about a

pipeline, the next step is to run an

Introspector.

Learn more about introspection in the next section.