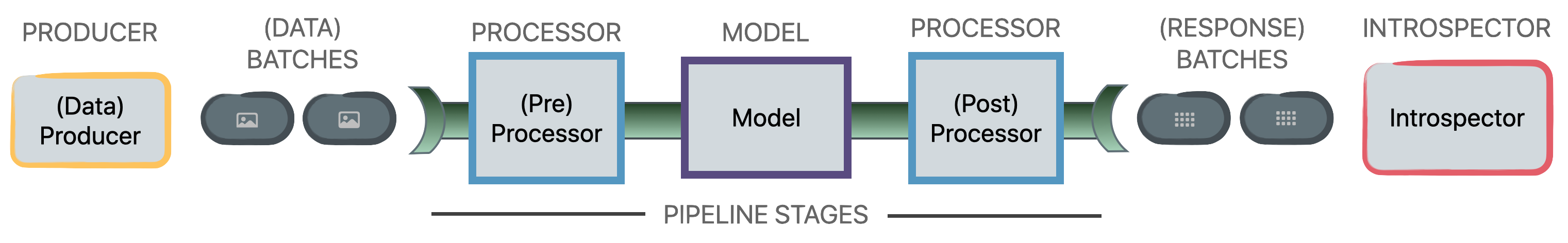

DNIKit Architecture Overview

This page contains an overview of the DNIKit architecture and how pieces work together. Please see the following three pages for more detailed information:

Architecture

DNIKit begins with a Producer that is in charge of generating Batches of data for the rest of DNIKit to process or consume.

DNIKit only loads, processes and consumes data when it needs to. This is known as lazy evaluation. Using lazy evaluation avoids processing, running inference and analyzing the full dataset in one fell swoop, as these operations can easily require more than the system’s available memory.

Instead, a Producer generates small Batches of data, which can be processed and analyzed with the available memory as part of a DNIKit pipeline. A pipeline is a composition of Batch transformations that we call PipelineStages.

Example PipelineStages could be various pre- or post-Processors, or Model inference. These all apply transformations to a Batch and output a new Batch (typically model responses).

Finally, DNIKit’s Introspectors will analyze input Batches (usually Batches of model responses) to provide insights about the dataset and/or model.
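The flow described above (a Producer yielding small Batches, PipelineStages transforming them, and an Introspector consuming the results) can be illustrated with plain Python generators. This is only a conceptual sketch of lazy evaluation and does not use the DNIKit API: `producer`, `normalize`, `toy_model`, `make_pipeline`, and `mean_response_introspector` are hypothetical names invented for this illustration, and a batch is modeled as a simple dictionary.

```python
from typing import Callable, Dict, Iterable, Iterator, List

# Hypothetical stand-ins for illustration only; these are NOT DNIKit classes.
Batch = Dict[str, List[float]]
Stage = Callable[[Batch], Batch]

produced: List[int] = []  # records batch indices as they are generated


def producer(data: List[float], batch_size: int) -> Iterator[Batch]:
    """Yield small batches on demand instead of loading everything at once."""
    for start in range(0, len(data), batch_size):
        produced.append(start // batch_size)
        yield {"input": data[start:start + batch_size]}


def normalize(batch: Batch) -> Batch:
    """Pre-processing stage: divide every input value by 10."""
    return {"input": [x / 10.0 for x in batch["input"]]}


def toy_model(batch: Batch) -> Batch:
    """Inference stage: a made-up 'model' that doubles its input."""
    return {"response": [2.0 * x for x in batch["input"]]}


def make_pipeline(source: Iterable[Batch], *stages: Stage) -> Iterator[Batch]:
    """Compose stages lazily: a batch is transformed only when it is pulled."""
    for batch in source:
        for stage in stages:
            batch = stage(batch)
        yield batch


def mean_response_introspector(batches: Iterable[Batch]) -> float:
    """Consume response batches one at a time, keeping only running totals."""
    total, count = 0.0, 0
    for batch in batches:
        total += sum(batch["response"])
        count += len(batch["response"])
    return total / count


# Building the pipeline does no work; batches are produced only when the
# introspector iterates over it.
pipe = make_pipeline(
    producer([1.0, 2.0, 3.0, 4.0, 5.0], batch_size=2), normalize, toy_model
)
mean_response = mean_response_introspector(pipe)
```

In this sketch, only one batch is held in memory at a time, which is the property lazy evaluation is meant to provide; the real DNIKit components have their own interfaces, described in the component pages.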

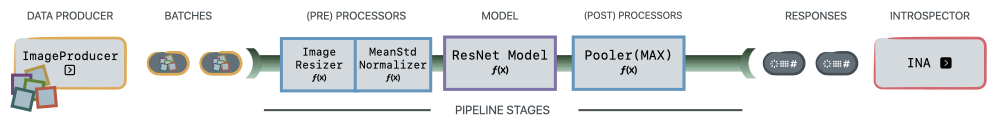

The following shows a generic DNIKit pipeline setup and a concrete example.

GENERIC

EXAMPLE

See the next sections in these How To Guides for information about each of DNIKit’s components.