Deploying to Core ML

With Core ML, you can integrate machine learning models into your macOS, iOS, watchOS, and tvOS apps. Classification and regression models created in Turi Create can be exported for use in Core ML. These include:

Classifiers

Regression

Export models in Core ML format with the export_coreml function.

    model.export_coreml('MyModel.mlmodel')

Once the model is exported, you can also edit model metadata (author, license, and description) using coremltools. This example contains more details on how to do so.
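As a rough sketch of the metadata step, the snippet below loads an exported model with coremltools, sets the author, license, and description fields, and saves the file again. It assumes coremltools is installed and that the file was written by export_coreml as shown above. The helper names annotate_model and update_exported_model are hypothetical and are not part of Turi Create or coremltools.

```python
def annotate_model(model, author, license_text, description):
    """Set the author, license, and description fields on a loaded model."""
    # These attribute names are the metadata properties exposed by
    # coremltools' MLModel class.
    model.author = author
    model.license = license_text
    model.short_description = description
    return model


def update_exported_model(path, author, license_text, description):
    """Load an exported .mlmodel file, update its metadata, and save it in place."""
    # Imported here so annotate_model can be used without coremltools installed.
    import coremltools

    mlmodel = coremltools.models.MLModel(path)
    annotate_model(mlmodel, author, license_text, description)
    mlmodel.save(path)
    return mlmodel
```

A call such as update_exported_model('MyModel.mlmodel', 'Jane Doe', 'MIT', 'Example model') would then rewrite the file with the new metadata; the author, license, and description values here are placeholders.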

Using Core ML

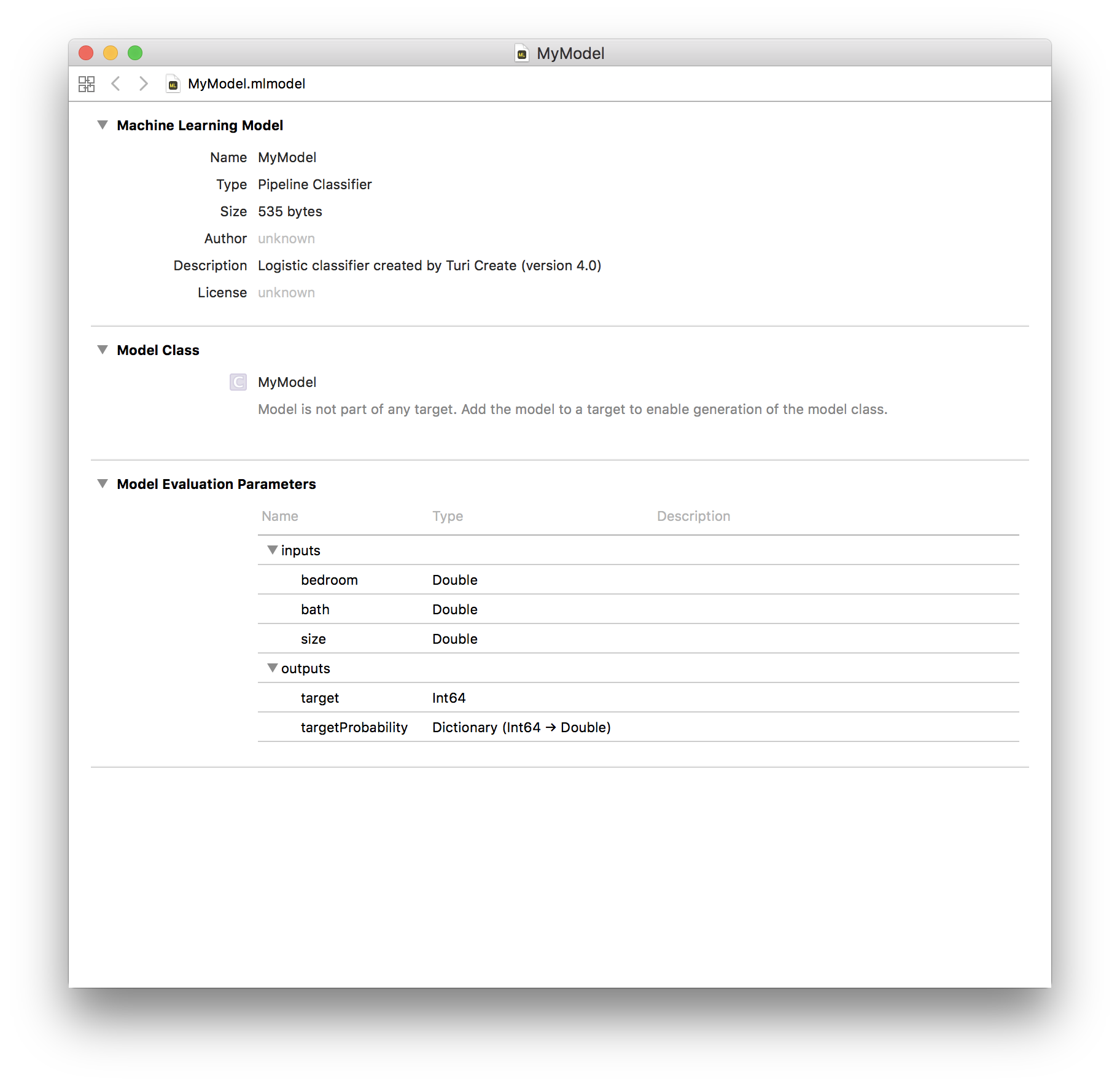

Add the model to your Xcode project by dragging the model into the project navigator. You can see information about the model—including the model type and its expected inputs and outputs—by opening the model in Xcode.

Xcode also uses information about the model’s inputs and outputs to automatically generate a custom programmatic interface to the model, which you use to interact with the model in your code. You can load the model with the following code:

    let model = MyModel()

The inputs to the model in Core ML are extracted from the input feature names in Turi Create. For example, if you have a model in Turi Create that requires three inputs (bedroom, bath, and size), the code you would use in Xcode to make a prediction is the following:

    guard let output = try? model.prediction(bedroom: 1.0, bath: 2.0, size: 1200) else {
        fatalError("Unexpected runtime error.")
    }

Refer to the Core ML sample application for more details on using classifiers and regressors in Core ML.